Regularised positive/negative part

The positive and negative parts of a scalar \(x\) are two functions defined as

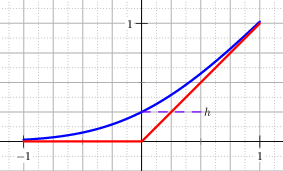

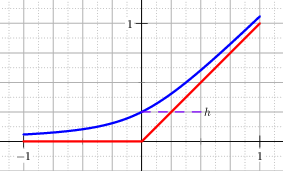

The corner at \(x=0\) is smoothed with the following approximation, see Fig. 9:

The regularised negative part is obtained by symmetry and is \(x^-_h \DEF -x^+_h(-x)\).
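The symmetry rule above can be sketched in a few lines of Python. This is only an illustration: the helper name `negative_part_from` is hypothetical, and the exact positive part is assumed to be \(\max(x,0)\), used here as a stand-in because the regularised formula is not reproduced in the text.

```python
def negative_part_from(pos_part):
    """Build x -> -pos_part(-x) from any positive-part function.

    Extra arguments (for example the parameter h) are passed through unchanged.
    """
    def neg_part(x, *args):
        return -pos_part(-x, *args)
    return neg_part


def exact_pos_part(x):
    # Assumption: the exact positive part is max(x, 0).
    return max(x, 0.0)


# Negative part obtained by symmetry from the (exact) positive part.
exact_neg_part = negative_part_from(exact_pos_part)

if __name__ == "__main__":
    for x in (-2.0, 0.0, 3.0):
        print(x, exact_pos_part(x), exact_neg_part(x))
```

Any regularised positive part with signature `f(x, h)` could be passed to the same helper, so the negative part never needs a separate implementation.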

Fig. 9 The graph of the regularised positive part (blue) and the exact positive part (red). The parameter \(h\) of this example is \(h=0.25\).

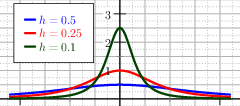

The derivatives of this function are smooth up to the second derivative, see Fig. 10.

Fig. 10 The graph of the first and second derivative of the regularised positive part.

The explicit expressions of the two derivatives are:

Regularised positive/negative part with erf

Another technique to regularise the positive (respectively negative) part function is to approximate it with the Error Function, erf for short, which is defined as the integral of a Gaussian,

\[
\mathrm{erf}(x) \DEF \frac{2}{\sqrt{\pi}}\int_0^x e^{-t^2}\,\mathrm{d}t .
\]

It is possible to scale the erf function to match the function \(x^+\).

This is done by requiring that the regularised function, denoted \(x^+_{\erf}\) for convenience, takes a small value \(h\) at \(x=0\), that is \(x^+_{\erf}(0)=h\).

It is convenient to introduce an auxiliary parameter \(\kappa\DEF\frac{1}{2h\sqrt{\pi}}\), hence the function

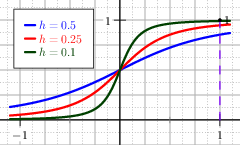

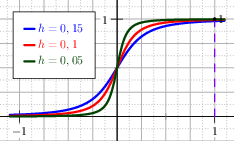

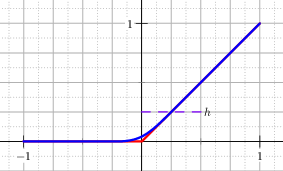

meets the requirements, see Fig. 11. In fact, at \(x=0\) it evaluates to \(h\). The function is clearly smooth, and its first two derivatives are:

Fig. 11 The graph of the regularised positive part with the Error Function and its first and second derivative.
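As a minimal sketch, the following Python code implements one erf-based function that satisfies the requirements stated above. The formula is a reconstruction, not taken from the text: it is assumed that \(x^+_{\erf}(x) = \frac{x}{2}\big(1+\mathrm{erf}(\kappa x)\big) + \frac{e^{-\kappa^2x^2}}{2\kappa\sqrt{\pi}}\), which gives \(x^+_{\erf}(0)=h\) for \(\kappa=\frac{1}{2h\sqrt{\pi}}\). The function names are hypothetical.

```python
import math


def _kappa(h):
    # Auxiliary parameter from the text: kappa = 1 / (2 h sqrt(pi)).
    return 1.0 / (2.0 * h * math.sqrt(math.pi))


def pos_part_erf(x, h):
    """Assumed erf-regularised positive part (reconstruction, see lead-in)."""
    k = _kappa(h)
    gauss = math.exp(-(k * x) ** 2)
    return 0.5 * x * (1.0 + math.erf(k * x)) + gauss / (2.0 * k * math.sqrt(math.pi))


def pos_part_erf_d1(x, h):
    """First derivative of the assumed formula: (1 + erf(kappa x)) / 2."""
    return 0.5 * (1.0 + math.erf(_kappa(h) * x))


def pos_part_erf_d2(x, h):
    """Second derivative of the assumed formula: kappa / sqrt(pi) * exp(-kappa^2 x^2)."""
    k = _kappa(h)
    return k / math.sqrt(math.pi) * math.exp(-(k * x) ** 2)


if __name__ == "__main__":
    h = 0.25
    for x in (-1.0, 0.0, 1.0):
        print(x, pos_part_erf(x, h), pos_part_erf_d1(x, h), pos_part_erf_d2(x, h))
```

Under this assumption the first derivative is a scaled erf that goes from 0 to 1, and the second derivative is a Gaussian bump centred at \(x=0\), so the function approaches \(0\) and \(x\) very quickly away from the corner.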

Regularised positive/negative part with Sin Atan

A typical function used to approximate other functions is the inverse tangent. Here it is composed with the sine function to match the positive part function. The control parameter is \(h\) (with \(\kappa=2h\)) and the approximation is:

The derivatives are smooth and are given by:

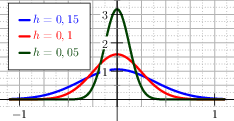

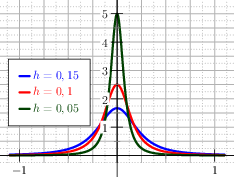

The parameter \(h\) controls the value of the function \(x^+_{\mathrm{sa}}\) at \(x=0\) so that \(x^+_{\mathrm{sa}}(0)=h\). The plot of the function with its derivatives is shown in Fig. 12.

Fig. 12 The graph of the regularised positive part with the SinAtan function and its first and second derivative.
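The following Python sketch shows one function consistent with the description above. It is a reconstruction under stated assumptions, not the formula from the text: it is assumed that \(x^+_{\mathrm{sa}}(x) = \frac{1}{2}\big(x+\sqrt{x^2+\kappa^2}\big)\) with \(\kappa=2h\), whose first derivative is \(\frac{1}{2}\big(1+\sin(\arctan(x/\kappa))\big)\) and which satisfies \(x^+_{\mathrm{sa}}(0)=h\). The function names are hypothetical.

```python
import math


def pos_part_sa(x, h):
    """Assumed SinAtan-regularised positive part (reconstruction, see lead-in)."""
    kappa = 2.0 * h
    # hypot(x, kappa) = sqrt(x^2 + kappa^2)
    return 0.5 * (x + math.hypot(x, kappa))


def pos_part_sa_d1(x, h):
    """First derivative, written with the sine of the inverse tangent."""
    kappa = 2.0 * h
    return 0.5 * (1.0 + math.sin(math.atan(x / kappa)))


def pos_part_sa_d2(x, h):
    """Second derivative: kappa^2 / (2 (x^2 + kappa^2)^(3/2))."""
    kappa = 2.0 * h
    return 0.5 * kappa ** 2 / math.hypot(x, kappa) ** 3


if __name__ == "__main__":
    h = 0.25
    for x in (-1.0, 0.0, 1.0):
        print(x, pos_part_sa(x, h), pos_part_sa_d1(x, h), pos_part_sa_d2(x, h))
```

Under this assumption the gap to the exact positive part decays only algebraically, roughly like \(\kappa^2/(4|x|)\) for large \(|x|\), which is slower than the Gaussian decay of an erf-based regularisation.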

Regularised positive/negative part with Polynomials

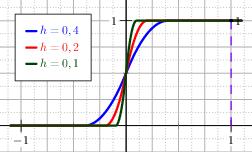

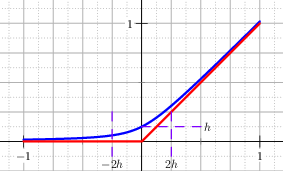

One of the most intuitive ways to replace the corner at \(x=0\) with a rounded curve is to smoothly join the line \(y(x)=0\) for negative \(x\) with the positive part \(y(x)=x\) by means of a polynomial. The minimal degree necessary to have continuous derivatives up to second order is three. If the parameter \(h\) is chosen such that \(x^+_{\mathrm{poly}}(-h)=0\) and \(x^+_{\mathrm{poly}}(h)=h\), then the approximating function is (see Fig. 13):

Fig. 13 The graph of the regularised positive part with the polynomial regularisation. In red the analytic positive part.

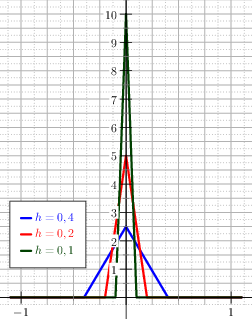

The first two derivatives are easy to compute and are, respectively (see Fig. 14):

Fig. 14 The graph of the derivatives of the positive part with the polynomial regularisation.